THINGS-data: large-scale multimodal datasets for object representations in brain and behavior

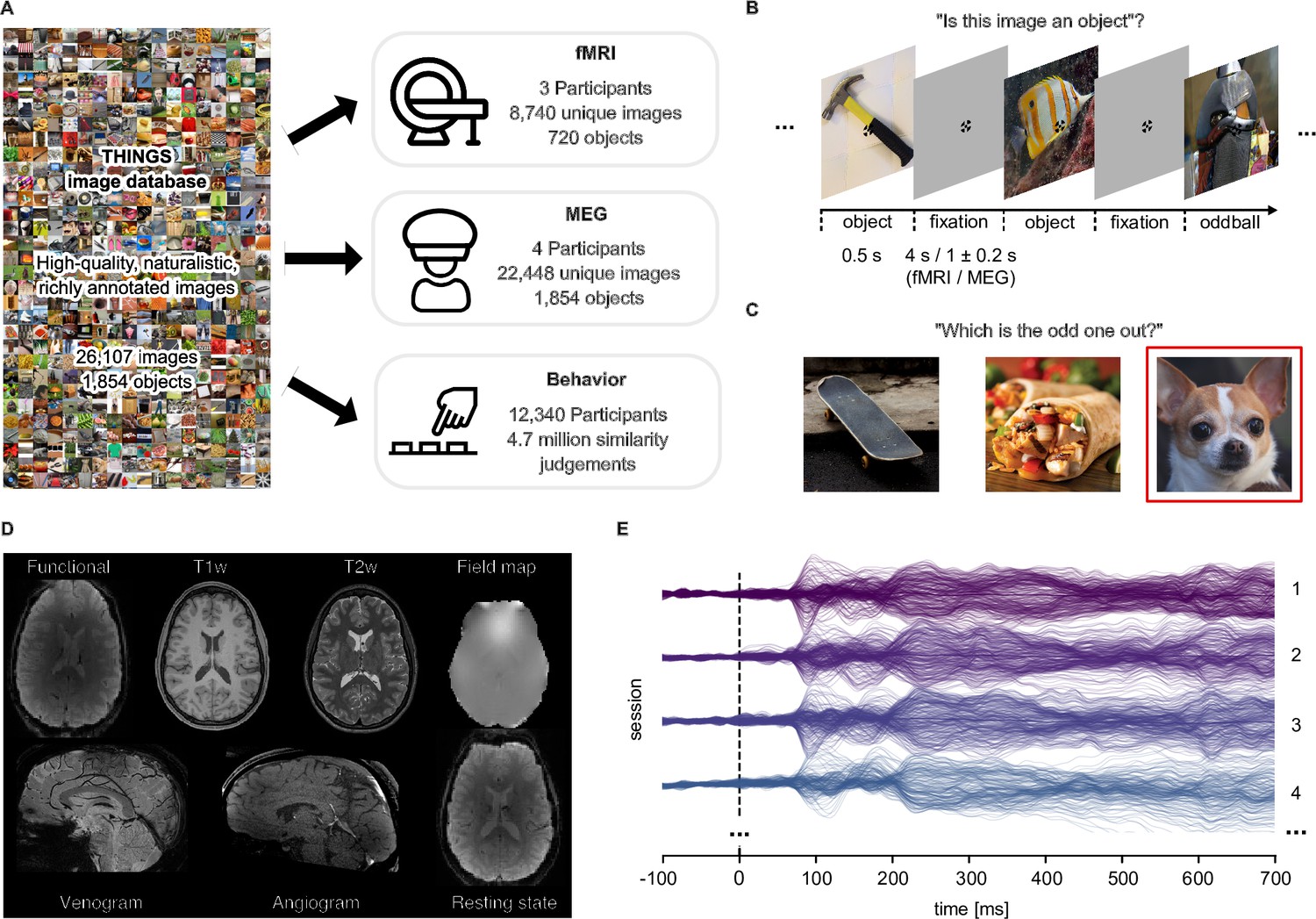

Understanding how the brain represents objects requires dense measurements across many objects and modalities. THINGS-data is a public collection of neuroimaging and behavioural data built for that purpose: large-scale brain responses with high spatial (fMRI) and temporal (MEG) resolution to thousands of natural object images, plus 4.7 million behavioural similarity judgments.

The fMRI dataset covers 720 object concepts (8,740 images) over 12 sessions per participant; the MEG dataset covers all 1,854 THINGS concepts (22,448 images). The behavioural dataset was collected via crowdsourced odd-one-out triplets and yields a 66-dimensional embedding of how people perceive object similarity. All data are openly available with code and derivatives (e.g. single-trial response estimates, ROIs, quality metrics).
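To make the link between odd-one-out triplets and an object embedding concrete, here is a minimal sketch of how an embedding can predict a triplet choice. It assumes that the similarity of two objects is the dot product of their embedding vectors and that choice probabilities follow a softmax over the three pairwise similarities. That choice rule is an assumption of this sketch, not something stated above. The function name `odd_one_out_probs` and the random `toy_embedding` are hypothetical stand-ins, not part of the released data or code.

```python
import numpy as np


def odd_one_out_probs(embedding, i, j, k):
    """Return [P(odd = i), P(odd = j), P(odd = k)] for one triplet.

    Assumes dot-product similarity and a softmax choice rule
    (illustrative assumptions, not the released pipeline).
    """
    xi, xj, xk = embedding[i], embedding[j], embedding[k]
    # Similarity of each of the three possible pairs.
    s_jk, s_ik, s_ij = xj @ xk, xi @ xk, xi @ xj
    # The odd one out is the item left out of the most similar pair,
    # so item i is scored by the similarity of the pair (j, k), etc.
    logits = np.array([s_jk, s_ik, s_ij], dtype=float)
    logits -= logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()


# Synthetic stand-in: 5 objects in a 66-dimensional non-negative space.
rng = np.random.default_rng(0)
toy_embedding = rng.random((5, 66))
probs = odd_one_out_probs(toy_embedding, 0, 1, 2)

# Hand-checkable case: objects 0 and 1 are identical, object 2 is orthogonal,
# so object 2 should be the most likely odd one out.
tiny = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
tiny_probs = odd_one_out_probs(tiny, 0, 1, 2)
```

In the hand-checkable case, the pair (0, 1) has the highest similarity, so the probability mass concentrates on object 2 as the odd one out. Fitting an embedding to observed choices would add an optimisation loop on top of this forward model, which is not shown here.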

THINGS-data is the core release of the THINGS initiative. It allows testing hypotheses at scale, replicating prior findings, and linking brain and behaviour across space and time, all with an extensive and representative sample of objects.